CHAPTER 7

The Architecture of HBase

Component Parts

In a distributed deployment of HBase, there are four components of the architecture, which together constitute the HBase Server:

- Region Servers – compute nodes that host regions and provide access to data

- Master Server – coordinates the Region Servers and runs background jobs

- Zookeeper – contains shared configuration and notifies the Master about server failure

- Hadoop Distributed File System (HDFS) – the storage layer, physically hosted among the Master and Region Servers

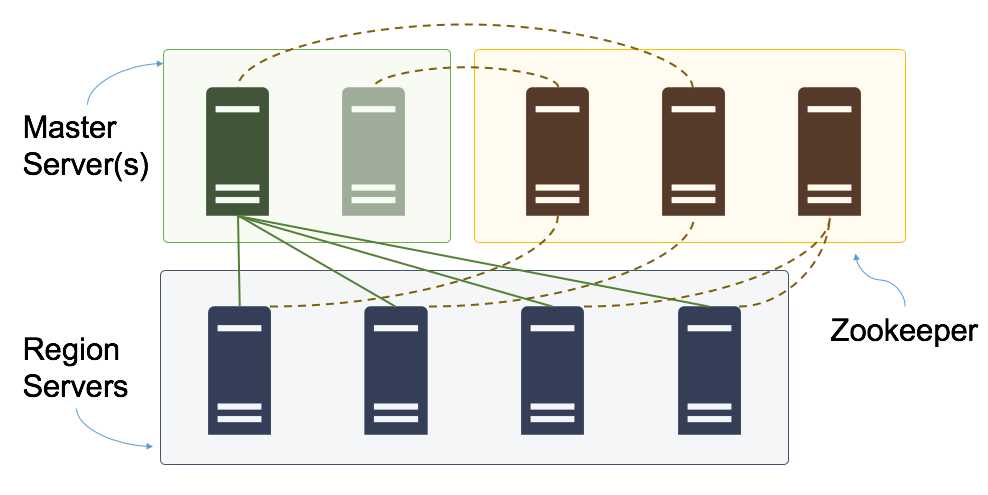

Figure 5 shows the relationship between the components in a distributed installation of HBase, where the components are running on multiple servers:

Figure 5: A Distributed HBase Cluster

Hosting each component on multiple servers provides resilience and performance in a production environment. As a preferred minimum, clusters should have two Master Servers, three Zookeeper nodes, and four Region Servers.

Note: Every node in an HBase cluster should have a dedicated role. Don’t try to run Zookeeper on the Region Servers—the performance and reliability of the whole cluster will degrade. Each node should be on a separate physical or virtual machine, all within the same physical or virtual network.

Running HBase in production doesn't necessarily require you to commission nine servers to run on-premises. You can spin up a managed HBase cluster in the cloud, and only pay for compute power when you need it.

Master Server

The Master Server (also called the "HMaster") is the administrator of the system. It owns the metadata, so it's the place where table changes are made, and it also manages the Region Servers.

When you make any administration changes in HBase, like creating or altering tables, it is done through the Master Server. The HBase Shell uses functionality from the Master, which is why you can't run the Shell remotely (although you can invoke HMaster functions from other remote interfaces).

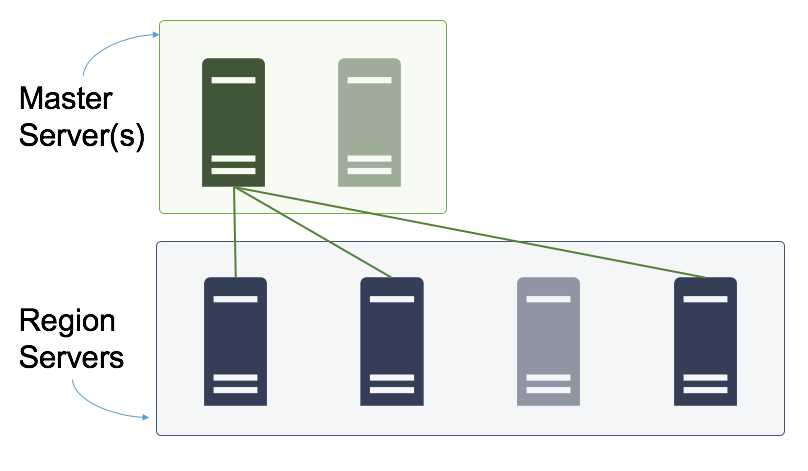

The Master Server listens for notifications from Zookeeper for a change in the connected state of any Region Servers. If a Region Server goes down, the Master re-allocates all the regions it was serving to other Region Servers, as shown in Figure 6:

Figure 6: Re-allocating Regions

When a Region Server comes online (either a lost server rejoins, or a new server in the cluster), it won't serve any regions until they are allocated to it. You can allocate regions between servers manually, but the Master periodically runs a load-balancer job, which distributes regions as evenly as it can among the active Region Servers.
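The re-allocation and balancing behavior described above can be sketched in a few lines of Python. This is a toy model and not how the Master is implemented: the `assignments` dictionary, the server and region names, and the "move from busiest to idlest" rule are all assumptions made for illustration.

```python
def reassign_regions(assignments, failed_server):
    """Hand each region of a failed server to the least-loaded survivor.

    assignments maps a server name to the list of regions it hosts
    (a hypothetical in-memory model, not an HBase data structure).
    """
    orphaned = assignments.pop(failed_server, [])
    if not assignments:
        raise RuntimeError("no active Region Servers left")
    for region in orphaned:
        # Ties on region count are broken by server name for determinism.
        target = min(assignments, key=lambda s: (len(assignments[s]), s))
        assignments[target].append(region)
    return assignments


def balance(assignments):
    """Move regions from the busiest to the idlest server until
    region counts differ by at most one."""
    while True:
        busiest = max(assignments, key=lambda s: (len(assignments[s]), s))
        idlest = min(assignments, key=lambda s: (len(assignments[s]), s))
        if len(assignments[busiest]) - len(assignments[idlest]) <= 1:
            return assignments
        assignments[idlest].append(assignments[busiest].pop())


cluster = {
    "rs1": ["r1", "r2", "r3"],
    "rs2": ["r4", "r5", "r6"],
    "rs3": ["r7", "r8", "r9"],
}
reassign_regions(cluster, "rs2")   # rs2 goes down; its regions move elsewhere
cluster["rs4"] = []                # a new server joins with no regions
balance(cluster)                   # the periodic job evens out the load
```

Note that the new server `rs4` hosts nothing until the balancing step runs, which mirrors the point that a Region Server serves no regions until they are allocated to it.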

There is only one active Master Server in a cluster. If there are additional servers for reliability, they run in active-passive mode. The passive server(s) listen for notifications from Zookeeper on the active Master's state, and if it goes down, another server takes over as the new Master.

You don't need several Master Servers in a cluster. Typically, two servers are adequate.

Client connections are not dependent on having a working Master Server. If all Master Servers are down, clients can still connect to Region Servers and access data, but the system will be in a vulnerable state and won't be able to react to any failure of the Region Servers.

Region Server

Region Servers host regions and make them available for client access. From the Region Server, clients can execute read and write requests, but not any metadata changes or administration functions.

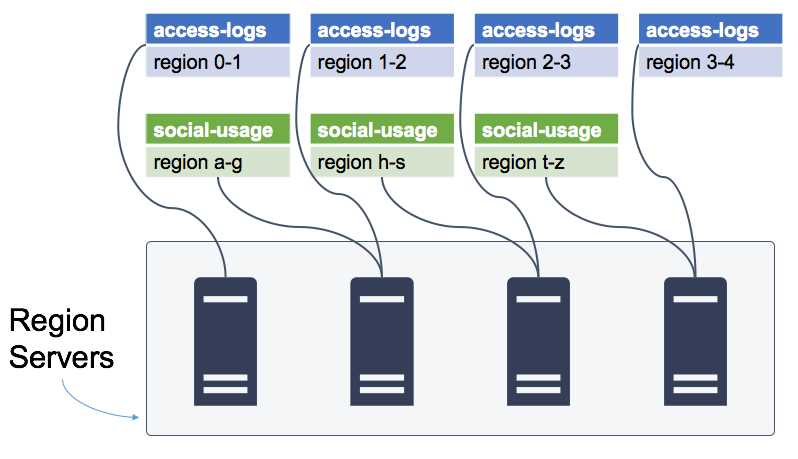

A single Region Server can host many regions from the same or different tables, as shown in Figure 7, and the Region Server is the unit of horizontal scale in HBase. To achieve greater performance, you can add more Region Servers and ensure there is a balanced split of regions across servers.

Figure 7: Regions Distributed Across Region Servers

Each region of a table is hosted by a single Region Server, and client connections to other Region Servers will not be able to access the data for that region.

To find the right Region Server, clients query the HBase metadata table (called hbase:meta), which has a list of tables, regions, and the allocated Region Servers. The Master Server keeps the metadata table up-to-date when regions are allocated.

As we've seen, querying the HBase metadata to find a Region Server is usually abstracted in client code, so consumers work with higher-level concepts like tables and rows, and the client library takes care of finding the right Region Server and making the connection.
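To make the lookup concrete, here is a minimal sketch of what a client library does conceptually. It assumes a simplified snapshot of the metadata for one table, holding only each region's start key and its server. The real hbase:meta table stores more than this, and the `META` list, host names, and `locate_region_server` function are hypothetical.

```python
import bisect

# Hypothetical, simplified view of hbase:meta for a single table:
# (region start key, Region Server). The first region starts at the
# empty key, and each region ends where the next one begins.
META = [
    ("", "rs1.example.local"),
    ("g", "rs2.example.local"),
    ("p", "rs3.example.local"),
]


def locate_region_server(meta, row_key):
    """Return the server hosting the region whose key range contains row_key."""
    start_keys = [start for start, _ in meta]
    # bisect_right gives the position of the first start key greater than
    # row_key; the region just before that position contains the row.
    index = bisect.bisect_right(start_keys, row_key) - 1
    return meta[index][1]


print(locate_region_server(META, "apple"))
print(locate_region_server(META, "melon"))
```

Because regions cover contiguous, sorted ranges of row keys, a binary search over the start keys is enough to find the owning server in this model.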

Region Servers are usually HDFS Data Nodes, and in a healthy HBase environment, every Region Server will host the data on local disks, for all the regions it serves. This is 100 percent data locality, and it means for every client request, the most a Region Server has to do is read from local disks.

When regions are moved between servers, data locality degrades: some Region Servers no longer hold the data for their regions locally and must request it from another Data Node across the network. Poor data locality means poor performance overall, but there is functionality to address that, which we'll cover in Chapter 9, “Monitoring and Administering HBase.”
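Data locality can be thought of as a simple ratio: of all the HDFS blocks that make up a region, how many have a replica on the server hosting that region? The following sketch computes that ratio for a made-up block layout. The `locality` function and the block and node names are illustrative assumptions, not an HBase API.

```python
def locality(region_blocks, server):
    """Fraction of a region's blocks that have a replica on `server`.

    region_blocks maps a block id to the set of Data Nodes holding a replica.
    """
    if not region_blocks:
        return 1.0  # nothing to read, so nothing has to cross the network
    local = sum(1 for nodes in region_blocks.values() if server in nodes)
    return local / len(region_blocks)


# Hypothetical layout: four blocks, each replicated on three Data Nodes.
blocks = {
    "b1": {"rs1", "rs2", "rs3"},
    "b2": {"rs1", "rs3", "rs4"},
    "b3": {"rs2", "rs3", "rs4"},
    "b4": {"rs1", "rs2", "rs4"},
}

print(locality(blocks, "rs3"))  # the region is hosted on rs3
print(locality(blocks, "rs5"))  # the region has just moved to rs5
```

In this model, a region that has just moved to a server holding none of its blocks has a locality of zero, so every read has to go over the network.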

Zookeeper

Zookeeper is used for centralized configuration, and for notifications when servers in the cluster go offline or come online. HBase predominantly uses ephemeral znodes in Zookeeper, storing state data used for coordination among the components; it isn't used as a store for HBase data.

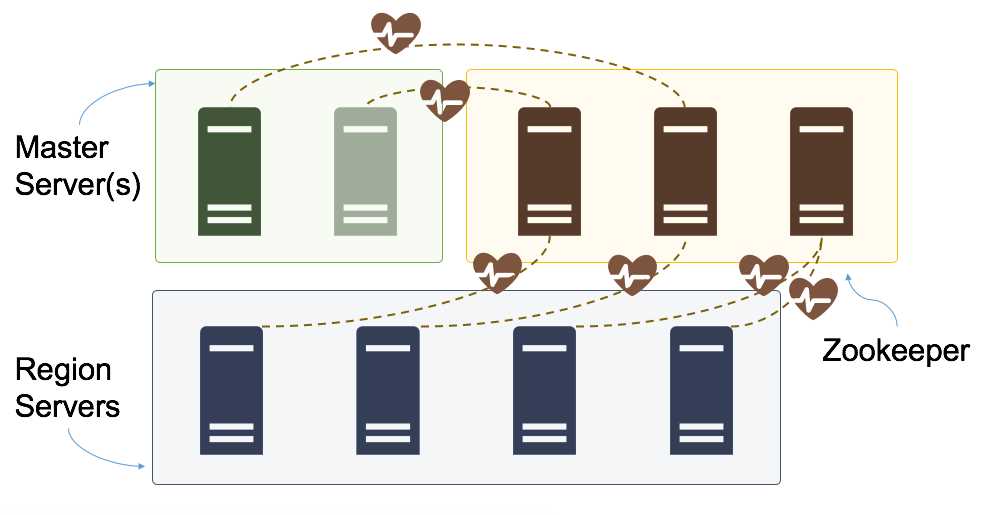

All the Master Servers and Region Servers use Zookeeper. Each server has a node, which is used to represent its heartbeat, as shown in Figure 8. If the server loses its connection with Zookeeper, the heartbeat stops, and after a period, the server is assumed to be unavailable.

Figure 8: Heartbeat Connections to Zookeeper

The active Master Server has a watch on all the Region Server heartbeat nodes, so it can react to servers going offline or coming online, and the passive Master Server has a watch on the active Master Server's heartbeat node, so it can react to the Master going offline.
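The heartbeat-and-watch pattern can be illustrated with a small in-memory model. This is not the Zookeeper API: the `ToyCoordinator` class, its method names, the node paths, and the timeout value are all invented for the sketch, and time is passed in explicitly so the behavior is easy to follow.

```python
class ToyCoordinator:
    """In-memory stand-in for heartbeat nodes and watches (not the real API)."""

    def __init__(self, session_timeout):
        self.session_timeout = session_timeout
        self.last_heartbeat = {}  # node path -> time of the last heartbeat
        self.watchers = {}        # node path -> callbacks to run on expiry

    def heartbeat(self, path, now):
        """Record that the server owning `path` is still alive."""
        self.last_heartbeat[path] = now

    def watch(self, path, callback):
        """Ask to be notified when the node at `path` disappears."""
        self.watchers.setdefault(path, []).append(callback)

    def expire_sessions(self, now):
        """Remove nodes whose heartbeat is too old and notify their watchers."""
        expired = [path for path, seen in self.last_heartbeat.items()
                   if now - seen > self.session_timeout]
        for path in expired:
            del self.last_heartbeat[path]
            for callback in self.watchers.pop(path, []):
                callback(path)
        return expired


zk = ToyCoordinator(session_timeout=30)
lost = []                              # what the active Master gets told about

zk.heartbeat("/rs/rs1", now=0)
zk.heartbeat("/rs/rs2", now=0)
zk.watch("/rs/rs1", lost.append)       # the Master watches each Region Server
zk.watch("/rs/rs2", lost.append)

zk.heartbeat("/rs/rs2", now=25)        # rs2 keeps reporting in; rs1 goes quiet
zk.expire_sessions(now=40)
print(lost)
```

The same mechanism covers Master failover in this model: a passive Master would register a watch on the active Master's node and take over when its callback fires.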

Although Zookeeper isn't used as a permanent data store, its availability is critical for the healthy performance of the HBase cluster. As such, the Zookeeper ensemble should run across multiple servers, using an odd number of nodes so that a majority can still be maintained when there are failures (a three-node ensemble can survive the loss of one server; a five-node ensemble can survive the loss of two).
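The arithmetic behind the odd-number recommendation is short enough to write down. The ensemble needs a strict majority of its nodes to keep working, so the number of failures it can survive is whatever is left over once that majority is accounted for. The function name below is just for illustration.

```python
def tolerated_failures(ensemble_size):
    """Number of nodes an ensemble can lose while keeping a strict majority."""
    quorum = ensemble_size // 2 + 1
    return ensemble_size - quorum


for size in (3, 4, 5, 6):
    print(size, "nodes can lose", tolerated_failures(size))
```

Running this shows why even-sized ensembles add cost without adding resilience: four nodes tolerate one failure, the same as three, and six nodes tolerate two, the same as five.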

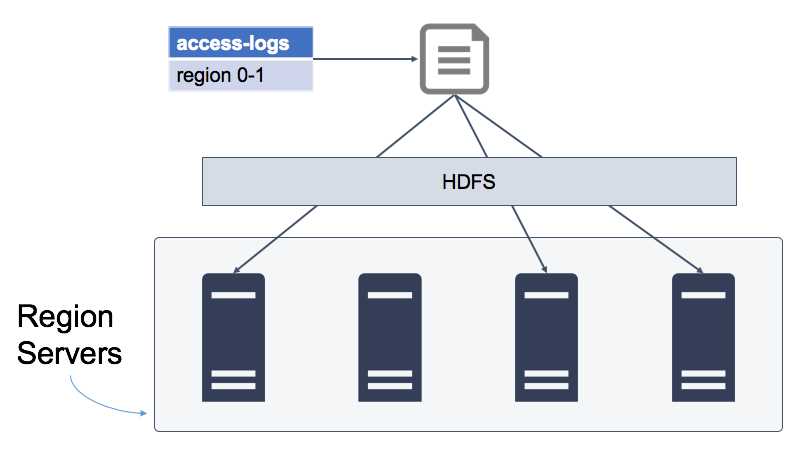

HDFS

A production HBase cluster is a Hadoop cluster, with the HDFS Name Node running on the Master Server and the Data Nodes running on the Region Servers.

The files which contain the data for a region and column family are stored in HDFS. You don't need a thorough understanding of HDFS to know where it fits with HBase. It's sufficient to say that data in HDFS is replicated three times among the Data Nodes, as shown in Figure 9. If one node suffers an outage, the data is still available from the other nodes.

Figure 9: HBase Data Stored in HDFS
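As a rough illustration of why replication keeps data available, the sketch below checks which blocks can still be read after some Data Nodes fail. The block layout, node names, and `available_blocks` function are hypothetical, and real HDFS also re-replicates blocks after a failure, which this model ignores.

```python
def available_blocks(block_replicas, failed_nodes):
    """Return the ids of blocks that still have a replica on a live node.

    block_replicas maps a block id to the set of Data Nodes holding a replica;
    failed_nodes is the set of Data Nodes that are currently down.
    """
    return {block for block, nodes in block_replicas.items()
            if nodes - failed_nodes}


# Hypothetical layout with three replicas per block.
replicas = {
    "b1": {"dn1", "dn2", "dn3"},
    "b2": {"dn2", "dn3", "dn4"},
}

print(sorted(available_blocks(replicas, {"dn1"})))
print(sorted(available_blocks(replicas, {"dn2", "dn3", "dn4"})))
```

With three replicas, a block only becomes unavailable in this model when all three of the nodes holding it are down at the same time.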

HBase relies on HDFS for data integrity, so the storage parts of the Region Server are conceptually very simple. When a Region Server commits a change to disk, it assumes reliability from HDFS, and when it fetches data from disk, it assumes it will be available.

Summary

In this chapter, we walked through the architecture of a distributed deployment of HBase. Client interaction is usually with the Region Servers, which provide access to table regions and store the data on the cluster using HDFS. Scaling HBase to improve performance usually means adding more Region Servers and ensuring load is balanced between them.

Region Servers are managed by the Master Server; if servers go offline or come online, the Master allocates regions among the active servers. The Master Server also owns the database metadata and handles all table changes. Typically, the HBase Master is deployed on two servers, running in active-passive formation.

Lastly, there's Zookeeper, which is used to coordinate between the other servers, to hold shared state, and to notify when servers go down so the cluster can repair itself. Zookeeper should run on an odd number of servers, with a minimum of three, for reliability.

In the next chapter, we'll zoom in on the Region Server, which is where most of the work gets done in HBase.
